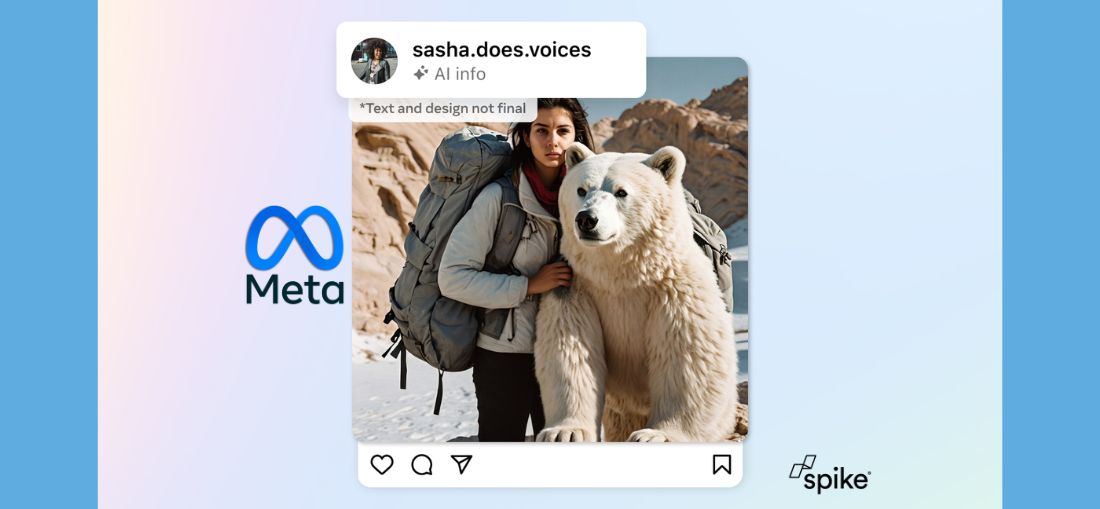

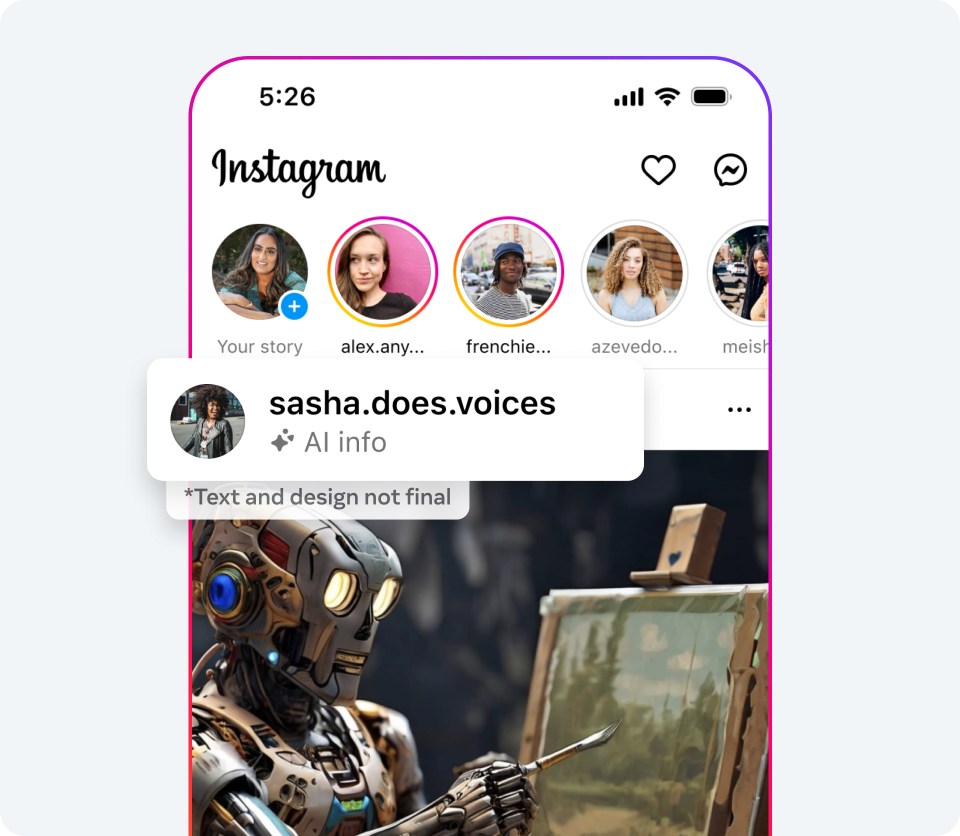

Meta, a company heavily invested in AI development, has seen a surge in creativity from people using its new generative AI tools, such as the Meta AI image generator, which creates images from simple text prompts. As AI-generated content becomes more prevalent, Meta acknowledges that transparency matters in distinguishing human-made from synthetic content. It has therefore begun labeling photorealistic images created with its Meta AI feature as “Imagined with AI” so that users know the technology was involved.

Because identifying AI-generated content depends on industry-wide collaboration, Meta has been working with partners to establish common technical standards for it. The aim is to let platforms like Facebook, Instagram, and Threads label AI-generated images more transparently. Meta’s approach combines visible markers, invisible watermarks, and metadata embedded within image files to indicate AI involvement.

Images courtesy of Meta

Meta is also working with other industry players on these common standards through forums like the Partnership on AI (PAI). In addition, it is building tools to detect invisible markers at scale and to label images created by various AI tools across different platforms.
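To make the idea of metadata embedded within image files concrete, here is a minimal sketch of how a platform might check a file for such a marker. It assumes the generator wrote the IPTC digital source type value `trainedAlgorithmicMedia` into the image's XMP metadata; the article does not say which fields Meta reads, and the function name `has_ai_metadata_marker` is hypothetical. It is not Meta's detector, only an illustration of the principle.

```python
# Assumption: the AI tool embedded the IPTC "digital source type" value
# "trainedAlgorithmicMedia" in the file's XMP metadata. XMP is usually
# stored as plain text inside the image file, so a byte search is enough
# for a rough illustration.
AI_SOURCE_TYPE = b"trainedAlgorithmicMedia"


def has_ai_metadata_marker(image_bytes: bytes) -> bool:
    """Return True if the raw file bytes contain the assumed AI marker."""
    return AI_SOURCE_TYPE in image_bytes


# Hypothetical usage with made-up byte strings standing in for real files.
tagged = (
    b"\xff\xd8...<Iptc4xmpExt:DigitalSourceType>"
    b"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
    b"</Iptc4xmpExt:DigitalSourceType>..."
)
untagged = b"\xff\xd8...an ordinary photo with no such field..."

print(has_ai_metadata_marker(tagged))
print(has_ai_metadata_marker(untagged))
```

A check like this only works while the metadata survives. Metadata can be stripped when a file is re-saved or screenshotted, which is one reason to pair it with invisible watermarks and visible markers.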

Why is this important?

Detecting AI-generated content in audio and video remains a challenge, and Meta is working on disclosure and labeling tools for such content. It is also exploring ways to detect AI-generated content automatically and to make invisible markers such as watermarks more robust.

Furthermore, Meta emphasizes the role of community standards and AI technology in combating harmful content on its platforms. While generative AI tools offer opportunities for innovation, Meta recognizes the need for responsible development and transparency. It is committed to learning from user feedback and to working with stakeholders on common standards for the responsible use of AI technologies.

In conclusion, Meta acknowledges the dual nature of AI as both a tool for innovation and a potential source of harm. It is committed to developing AI technologies transparently and collaboratively while upholding community standards to keep its platforms safe.

Author: spike.digital