An eventful year in digital ended with a bang as OpenAI took centre stage with its announcement of ChatGPT on 30 November 2022. Much will be said and written in 2023 about OpenAI's advances in conversational search, and the eagerly anticipated release of GPT-4 is likely to generate considerable hype in the AI space.

GPT-3 was roughly a 100x step up from GPT-2 in scale (from 1.5 billion to 175 billion parameters), which is why the fourth-generation GPT is so eagerly anticipated in the AI world. Where GPT-2 was quite limited in highly specialised areas, GPT-3 went further, handling tasks such as answering questions, summarising text, writing essays, translating between languages and generating computer code. For this reason, many articles on the web cite another 100x improvement from GPT-3 to GPT-4. That would be immense, but the OpenAI team has not substantiated it at this point. Even so, we should be prepared for a significant improvement.

GPT-4 is nearly ready

According to various posts on Twitter, GPT-4 has been in training since September 2022, which suggests it is close to release. That could be early in 2023 or later in the year, but it is clearly on the way. GPT-4 is also reported to be trained on a larger dataset, which should improve its accuracy, robustness and overall cost efficiency.

Rumours on parameters

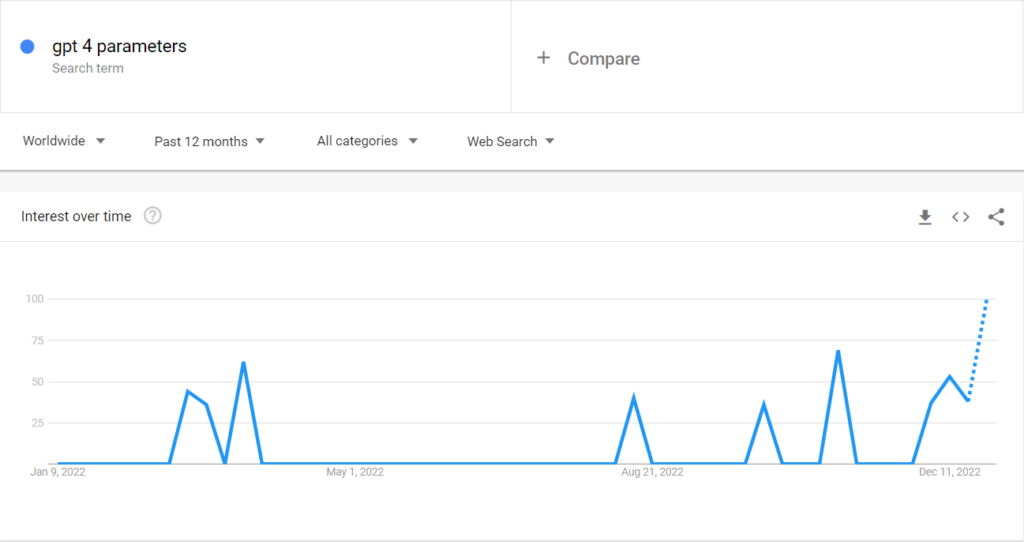

Launched in 2020, the GPT-3 language model has 175 billion parameters and produces text with a high degree of fluency. GPT-4 has neither a confirmed launch date in 2023 nor a confirmed parameter count. Since 2021, rumours in the AI space have suggested that GPT-4 will make a paradigm shift to 100 trillion parameters. However, in a private Q&A session in April 2022, OpenAI CEO Sam Altman denied the 100 trillion parameter rumour, although a significant increase in parameters is still widely expected. Interestingly, this topic alone appears to be generating a great deal of hype right now.

Rumours on multi-modal inputs

Multi-modal inputs would make language models far more versatile, letting them work with everyday content beyond plain text. Those at the top of OpenAI are under NDA, so it remains unclear whether GPT-4 will accept audio, image and perhaps even video inputs alongside text. While GPT-4 could be multi-modal, it may well remain text-only.

Significant investment from Microsoft

OpenAI has raised over $1 billion to date from Microsoft and other backers. It is also seeking more funding in the coming years and projects over $1 billion in revenue by 2024.

Accelerated disruption

For many, language processing models are developing faster than expected. From copywriters to programmers, it is understandable that people may feel marginalised as we all come to grips with the scale of change that AI will bring in the coming years.

Duncan Colman