Google has been busy making up time on its AI product roadmap, and it has now introduced Gemma, a family of lightweight, state-of-the-art open models developed by Google DeepMind and other teams at Google.

Google has a history of contributing to the open community with innovations such as Transformers, TensorFlow, BERT, and more.

Gemma is designed for responsible AI development. The name comes from the Latin "gemma", meaning "precious stone", and is meant to reflect the models' value and quality.

Key points about the new Gemma models:

- Available worldwide in two sizes: Gemma 2B and Gemma 7B, each with pre-trained and instruction-tuned variants.

- A Responsible Generative AI Toolkit is provided to guide safer AI applications with Gemma.

- Toolchains for inference and supervised fine-tuning (SFT) are available across major frameworks: JAX, PyTorch, and TensorFlow through native Keras 3.0.

- Ready-to-use Colab and Kaggle notebooks, as well as integration with popular tools like Hugging Face, MaxText, NVIDIA NeMo, and TensorRT-LLM, simplify Gemma adoption.

- Gemma models can run on various platforms, including laptops, workstations, and Google Cloud, with optimization for different hardware platforms such as NVIDIA GPUs and Google Cloud TPUs.

- Terms of use permit responsible commercial usage and distribution for all organisations.
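As a rough illustration of the Hugging Face route mentioned in the list above, here is a minimal sketch of loading one of the four variants with the `transformers` library. The repository names (`google/gemma-2b`, `google/gemma-7b-it`, and so on) and the helper functions `gemma_model_id` and `generate` are assumptions made for this example, not something stated in Google's announcement, so check the model pages on the Hub before relying on them.

```python
# Minimal sketch, not an official recipe. The Hub repository names are
# assumed to follow the pattern "google/gemma-<size>[-it]".
SIZES = ("2b", "7b")


def gemma_model_id(size: str, instruction_tuned: bool = False) -> str:
    """Build the assumed Hugging Face repository name for a Gemma variant."""
    size = size.lower()
    if size not in SIZES:
        raise ValueError(f"unknown Gemma size {size!r}; expected one of {SIZES}")
    suffix = "-it" if instruction_tuned else ""
    return f"google/gemma-{size}{suffix}"


def generate(prompt: str, size: str = "2b", instruction_tuned: bool = False,
             max_new_tokens: int = 64) -> str:
    """Download the chosen variant and generate a continuation of the prompt."""
    # Imported lazily so gemma_model_id() works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_id = gemma_model_id(size, instruction_tuned)
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Tokenize the raw prompt and return PyTorch tensors.
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate("Open models matter because")` would download the 2B pre-trained weights, which typically requires accepting the Gemma terms of use on the Hub and being logged in. The 7B variants need considerably more memory than the 2B ones.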

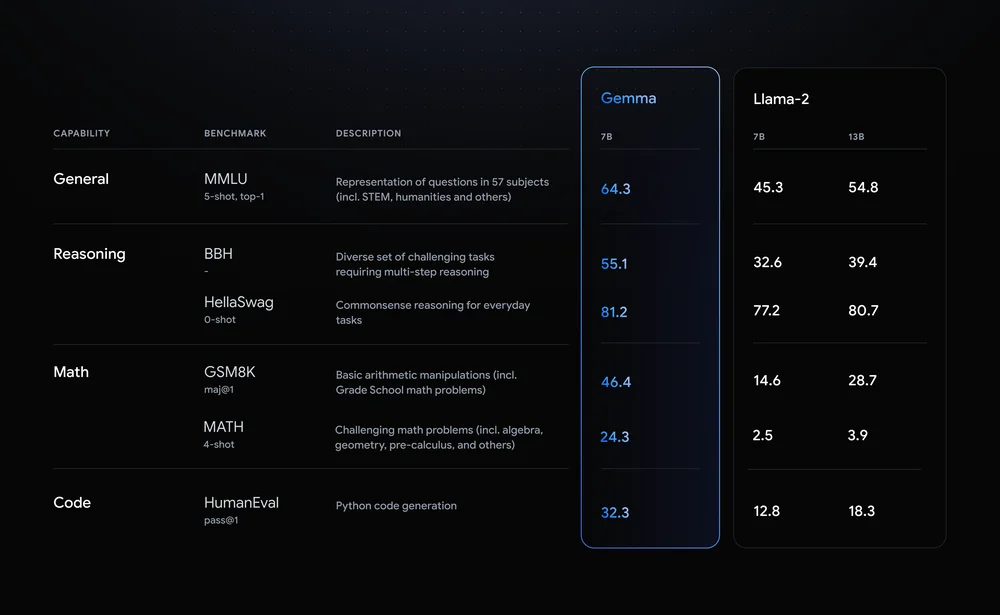

According to Google, Gemma models achieve state-of-the-art performance for their sizes compared with other open models, and they can run directly on developer devices. Google also reports that they surpass significantly larger models on key benchmarks while maintaining its safety and responsibility standards.

Images courtesy of Google

Gemma is designed with Google’s AI Principles in mind, with the aim of making the models safe and reliable. Google employs automated data filtering, extensive fine-tuning, and reinforcement learning from human feedback (RLHF) to align the models with responsible behaviors.

To aid developers in building safe AI applications, Google provides the Responsible Generative AI Toolkit, offering methodologies for safety classification, model debugging, and guidance based on best practices.

Gemma supports fine-tuning on custom data for specific application needs and is compatible with various frameworks, tools, and hardware platforms. Integration with Google Cloud’s Vertex AI offers MLOps toolsets for easy deployment and optimization.

For developers and researchers, Google offers free access to Gemma through platforms like Kaggle and Colab, along with credits for Google Cloud usage to accelerate projects.

Why is this important?

In a busy month for the tech giant, Gemma represents Google’s commitment to advancing AI responsibly and making cutting-edge models accessible to developers and researchers worldwide.

Google has made all the information and guides for Gemma available at ai.google.dev/gemma.

Author spike.digital